AI > 🗨️🤖

There's more to AI than Chatbots!

When I talk to organisations at the start of their AI maturity journey, one thing stands out immediately. Mention AI, and the inevitable response is “slap a chatbot on it.” And yet there is so much more that AI can do! And that’s without even venturing into the fabled future land of self-crashing cars.

This post presents a framework for selecting good use cases for AI in your daily work. It should apply both to how you use AI yourself and to how you build AI-powered features into your product.

You’re (probably) treating AI wrong

With all the hype coming out of Silicon Valley and all the new vibe-coding tools that you’re supposed to be using to automate your everything, one would think that AI is a silver bullet, a magic hammer, and a business moat all rolled into one.

Sorry sunshine, it ain’t.

Two things are core to any knowledge work (as opposed to physical work): creative thinking and critical thinking. Despite the hype, AI is far from capable of either; it’s a regurgitation of the probable average. You still need to be in charge of both, directing the creativity and doing the critical thinking.

AI can sometimes be used to automate other tasks that take time away from creative work, critical thinking and, y’know, talking to customers, and that’s great. But why were you spending so much time on low-value tasks to begin with? There might be something else going on that needs solving first (like building an empowered team that doesn’t need so much hand-holding).

So being good at using AI in your PM job is good, but only useful if it supports the primary activity of a PM: communication. It’s great for building rapid prototypes or improving your writing (whether stylistically or as a thinking partner). It absolutely sucks when you use it to replace communication by having it do the thinking and writing for you. Creating pages upon pages of beautifully formatted PRDs is only useful in organisations that think the value of a PRD lies in having one, not in the research that went into building it and the discussions around it that build shared understanding.

But what about your product? There it’s even worse. All this rapid prototyping and speedy coding means that if you can build it that fast, so can your competitors. If you had a bright idea and put it out, it won’t take much to replicate. And that’s before considering that the code produced is often buggy and insecure; after all, writing code is only a small part of what a developer does.

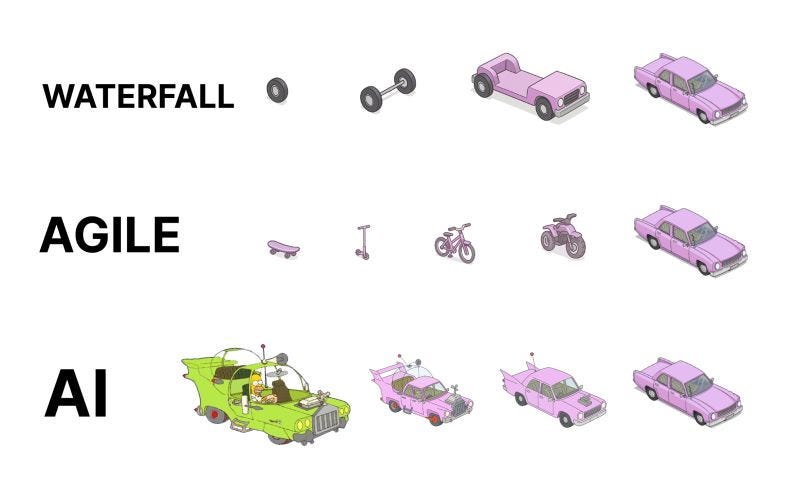

I’ll leave this as a tongue-in-cheek illustration of trying too many things, too fast:

Remember:

Doing all the things faster isn’t always a good idea. Automating tedium is great, automating critical and creative thinking — the things that build product taste — is not.

The value of technology and cost moats will erode, and you’ll be left with distinct, human-centric advantages: networks for B2C or large-scale B2B, and relationships for enterprise.

How to think about integrating AI

There are two kinds of solutions you can integrate into your product (or workflows, for that matter).

Data-centric

Let’s start with building your own models on proprietary data. The details will be very domain-specific, but you should think about these general principles:

Life impact

All machine learning is probabilistic, so it will go wrong at some point. When it does, what’s the worst-case scenario? It’s one thing to make a bad song recommendation; it’s another to deny someone financial benefits.

Legal and Moral rights

You should have legal rights to the data (see the current wave of copyright lawsuits). But you should also have moral rights. Quietly changing your T&Cs so that you can legally train models on user data doesn’t make it right, and your users will show their displeasure with their wallets.

Human-centricity

The model should aid humans, not rob them of agency. It should be built on inclusive data sets. It should minimise discrimination and allow for the inherent randomness of human lives, rather than codify existing societal biases.

Explainability

Applications should present transparent results, and any decision-making should be explainable and the inner logic interpretable.

When things go wrong, there should be a clear path to escalation to humans, who could review and revert any automated decisions.
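To make the escalation path a little more concrete, here is a minimal Python sketch. Everything in it is hypothetical (the `Decision` and `DecisionLog` names, the 0.9 threshold, the example cases): low-confidence decisions go to a human review queue instead of being automated, every automated decision carries a human-readable reason, and a reviewer can revert one without erasing the original from the audit trail.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical threshold: below this model confidence, a human decides instead.
REVIEW_THRESHOLD = 0.9


@dataclass
class Decision:
    case_id: str
    outcome: str                       # e.g. "approve" or "deny"
    reason: str                        # explanation shown to the affected person
    confidence: Optional[float] = None  # model score 0..1; None for human decisions
    decided_by: str = "model"
    reverted: bool = False


@dataclass
class DecisionLog:
    history: List[Decision] = field(default_factory=list)       # every decision, kept for audit
    review_queue: List[Decision] = field(default_factory=list)  # cases awaiting a human

    def submit(self, decision: Decision) -> str:
        """Automate confident decisions; escalate everything else to a human."""
        if decision.confidence is None or decision.confidence < REVIEW_THRESHOLD:
            self.review_queue.append(decision)
            return "escalated"
        self.history.append(decision)
        return "automated"

    def current(self, case_id: str) -> Optional[Decision]:
        """Return the latest non-reverted decision for a case, if any."""
        for d in reversed(self.history):
            if d.case_id == case_id and not d.reverted:
                return d
        return None

    def revert(self, case_id: str, reviewer: str, outcome: str, reason: str) -> Decision:
        """A human overrides a decision; the original stays in history, flagged."""
        original = self.current(case_id)
        if original is None:
            raise KeyError(case_id)
        original.reverted = True
        override = Decision(case_id, outcome, reason, decided_by=reviewer)
        self.history.append(override)
        return override


if __name__ == "__main__":
    log = DecisionLog()
    print(log.submit(Decision("A-1", "deny", "income below threshold", confidence=0.97)))
    print(log.submit(Decision("A-2", "deny", "missing documents", confidence=0.62)))
    log.revert("A-1", reviewer="j.smith", outcome="approve", reason="income verified manually")
    print(log.current("A-1").outcome)
```

The point of the design is that a revert appends rather than overwrites, so there is always a record of what the model decided, why, and who overruled it.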

The usual

All of the above is on top of data privacy, regulatory compliance, cybersecurity, environmental and social impact, etc. The application, including all the hosted data, should be secure and robust, reliable in its performance, and assured to remain so with strict governance.

Yes, I know you can make more money by doing the opposite. That’s how ‘Big Tech™️’ has been running for years now. But in your case, you’re more likely to face ruin by rushing to market with ill-conceived, biased models that over-promise and under-deliver, where the only reason nobody can tell is that there’s no way to raise a complaint or to understand why a decision was made.

I happen to believe humanity comes before profit. Even if you don’t, you might be surprised to find that it’s also better for business, at least in the long run.

General purpose

When it comes to integrating general-purpose models into your product (LLMs, multimedia generators, or the recent crop of multi-modal models), most of the above principles still apply. Think about how your app or feature might impact human lives, and consider all the biases that went into training generative models.

But faced with the current hype around LLMs and the over-promising that comes out of Silicon Valley, this is usually where the ‘slap a chatbot on it’ crowd jumps on the AI train. Not sure what to do? Why not let the public do anything and everything by asking in plain English? Well, for a start, because it can’t, and for another, because it’s often a shitty UX.

The first time you experience the emergent properties of LLMs, whether ‘figuring out’ how to write an answer based on input text (RAG) or listing the steps needed to achieve a task, it feels pretty magical. But getting an AI to follow a set process (an algorithm) for any meaningful length is unreliable at best, ‘research’ tools rely on finding existing data, and ‘reasoning’ models actually perform worse than regular LLMs on anything outside their training data. Can you really afford to rely on a technology that goes wrong often enough to disappoint users, or that has been known to cause significant backlash even when it does work?

Side note: remember that those telling you to “build now, because by the time you’re done the technology will be there” have a financial interest in hooking you on it. I’d like to see that claim even approach plausibility before I trust it, because as far as I can tell it will require materially different architectures than current models use.

A second key observation is that a virtual assistant in the form of a blank prompt (“Ask me anything! I can do everything!”) is a poor user experience. First, it will take a long time for the technology to mature enough to be trusted. Second, users aren’t experts in prompt engineering or in thinking up solutions.

Both observations indicate that you will need to design a more structured UX and build the UI accordingly. You can then delegate tasks to “agents” that don’t actually have any agency; that part should remain the responsibility of the users. In that role, agents can assist and speed things up by helping the user think (at the shallow level of “have you thought of these 10 considerations that might apply?”) and do (executing specific tasks that can be constrained, validated, and controlled for blast radius when they go wrong).
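As a rough illustration of “constrained, validated, and controlled for blast radius”, here is a minimal Python sketch. It assumes the model’s output has already been parsed into a structured proposal; the action names, the 20-record cap, and the `ProposedAction`, `validate` and `run` helpers are all hypothetical. The model only proposes; the application checks the proposal against an allowlist and a size limit, and nothing executes until the user approves.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical allowlist: the only actions the assistant may ever propose.
ALLOWED_ACTIONS = {"tag_ticket", "draft_reply"}
# Hypothetical blast-radius cap: the most records a single action may touch.
MAX_RECORDS = 20


@dataclass
class ProposedAction:
    name: str               # which action the model wants to run
    record_ids: List[str]   # which records it would touch
    summary: str            # plain-language description shown to the user


def validate(action: ProposedAction) -> List[str]:
    """Return a list of problems; an empty list means the action is within bounds."""
    problems = []
    if action.name not in ALLOWED_ACTIONS:
        problems.append(f"action '{action.name}' is not allowed")
    if not action.record_ids:
        problems.append("action touches no records")
    if len(action.record_ids) > MAX_RECORDS:
        problems.append(f"touches {len(action.record_ids)} records (limit {MAX_RECORDS})")
    return problems


def run(action: ProposedAction, user_approved: bool) -> str:
    """Execute only validated actions that the user has explicitly approved."""
    problems = validate(action)
    if problems:
        return "rejected: " + "; ".join(problems)
    if not user_approved:
        return "awaiting approval: " + action.summary
    # The real side effect would happen here; this sketch only reports it.
    return f"executed {action.name} on {len(action.record_ids)} record(s)"


if __name__ == "__main__":
    ok = ProposedAction("tag_ticket", ["T-1", "T-2"], "Tag 2 tickets as 'billing'")
    print(run(ok, user_approved=False))
    print(run(ok, user_approved=True))
    bad = ProposedAction("delete_account", ["U-9"], "Delete user U-9")
    print(run(bad, user_approved=True))
```

Agency stays with the user here: the model can suggest anything, but only a narrow, pre-approved set of actions can ever run, each capped in how much it can affect.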

In Summary

You can do a lot of good with AI, if you pay attention to all the dangers and pitfalls, avoid the hype, and plan accordingly. Chatbots aren’t the best user interface, or even the most interesting use case. Doing things ‘faster’ isn’t a replacement for doing things well.

You should be playing with AI, exploring its capabilities and limitations, but don’t think that putting in “AI” (of whatever flavour) replaces actually understanding your customers, their needs, and the business model for delivering them a solution.