How not to do AI

Assuming you're not in cancer research.

I’ve written before on the Ethics of AI, calling for responsible product development. I followed that with the whimsically titled Maslow's Hierarchy of AI Needs, about the maturity journey that most companies go through these days as they suddenly need an “AI strategy”.

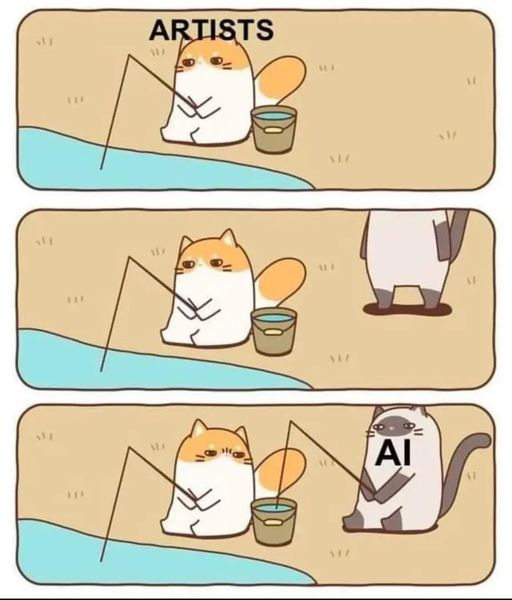

This column is about what Generative AI is actually good for, in the vague hope of shortening its hype cycle (yet another previous post). I’ll assume you read the previous posts, but as a recap, this is how half the world (the half not drinking the Silicon Valley Kool-Aid) feels about AI:

Bear with me, I’m not a Luddite. I am a creator (I write novels), but I have also been developing AI-powered applications for the past 6 years. I’ve also been around to see previous hype cycles.

So I’d like to think I have a valid perspective on both sides.

Let’s start with the absolute crap.

OpenAI is openly admitting it needs to circumvent copyrights to make money. Yep, Sam Altman must come up with some legal pretzel-twisting like "copyright law does not forbid training" or else risk being thrown out of the billionaires’ private spaceship club. Poor little Sam.

There’s not even the pretence of “the good of humanity.” Once ‘Open’ AI went closed with GPT 3 and the move to commercialise, it’s all about the money 🤑. What do you think of a business model that’s basically “I can only profit if I steal”?

And before you start with “AI is the next evolutionary Big Thing™️”, think where you might have heard it. That’s right, all the AI companies that need the bubble to continue growing, so the VCs who invested in them make money. Ulterior motive, anyone?

See, Generative AI, despite what you might have heard, is — and always will be, because that’s how it’s built — the lowest common denominator. The lowest common denominator of “stuff I found on the internet”, at that.

These models are built by calculating the most probable response, as in explicitly aiming at the average. So much so, that if you train AI on AI-generated content, you’ll create artificial stupidity. This means that they cannot create anything new, even if they had any consciousness behind them.
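To make "most probable response" concrete, here is a deliberately tiny sketch. It is not how any production LLM is implemented (those use neural networks over tokens and usually sample rather than always taking the top choice); the corpus and the function names are hypothetical, made up for illustration. It only shows the core idea: count what usually comes next, then return the most common continuation.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; real models train on billions of tokens, not three sentences.
CORPUS = [
    "the cat sat on the mat",
    "the cat sat on the sofa",
    "the cat sat on the mat again",
]

def build_bigram_counts(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def most_probable_next(counts, word):
    """Return the most frequent continuation of `word`, or None if it was never followed by anything."""
    if word not in counts:
        return None
    return counts[word].most_common(1)[0][0]

counts = build_bigram_counts(CORPUS)
print(most_probable_next(counts, "on"))   # the
print(most_probable_next(counts, "the"))  # cat
```

Notice that "sofa", the one slightly unusual choice in the corpus, never wins: the most frequent continuation always does. That is the pull toward the average in miniature.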

How bad is it? A company that replaced its creative writing team with AI-generated content still has to employ humans to make the machine-generated content sound human. It’s a race to the bottom. It will end just like the “great offshoring”, when executives learnt that letting the CFO cut costs by cutting customer support or developing-by-spec ended up losing the company money.

For those who think that “this is the worst AI will ever be” and it will only improve from now on, consider this. If we look at numbers, this was an image bandied about when GPT 4 came out, comparing sizes:

And yet, according to the LMSYS Chatbot Arena Leaderboard, the difference in scoring is less than 25%: 1068 for ChatGPT-3.5-Turbo vs 1316 for ChatGPT-4o-latest. We’ve hit the plateau of diminishing returns, where an exponential (think 10x to 100x) increase in size, with insane compute costs, results in a very small fractional increase in benefit. This might explain the need to hype up AGI and ignore legal restrictions.
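For anyone who wants to check the figure, here is the arithmetic, taking the two leaderboard scores quoted above at face value and treating them as a plain ratio (a simplifying assumption, since arena scores are ratings rather than a linear measure of quality):

$$\frac{1316 - 1068}{1068} = \frac{248}{1068} \approx 0.232$$

That is roughly a 23% higher score.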

Let me remind you again that those pushing the AGI or Singularity messages are driven by the need to hype up their own companies to drive VC valuations. While there will undoubtedly be improvements in the models, they will not come about from sheer size or from violating copyrights.

Basically, if your business model requires that you change the law to ignore human rights, perhaps you don’t have the right product, let alone the right business.

The fundamental error

Leaving the massive copyrights issue aside for a moment (we’ll get back to it, don’t worry), consider again how LLMs are built around predicting the next statistically plausible token from a gross average. That approach is not good at the extremes: either accuracy or creativity.

To a degree, you can solve the accuracy issues (Perplexity is doing this rather well). Creativity is something else.

If you want to understand why that is so in more depth, I urge you to read these two amazing New Yorker pieces (please! they’re amazing and important):

ChatGPT Is a Blurry JPEG of the Web does a wonderful job of explaining how the training condenses everything like a lossy JPEG compression, and how it’s basically returning a statistical average, not anything original.

Why A.I. Isn’t Going to Make Art presents an interesting distinction between skill and intelligence, and how generative models are pretty much neither. As a striking example, your child’s note with “I love you” on it is absolutely average in terms of originality or content, but that’s entirely beside the point.

I’ve written a similar message in the post I mentioned above: communication is the transfer of ideas between one human and another. Art doesn’t exist in a vacuum, but is a transmission of experience from the artist to the consumer, to express and challenge perceptions.

You can, of course, train an LLM on curated “great” literature, imagery, music, etc. The quality produced by the model would be much higher, as you’ve shifted what “average” is. But that isn’t creativity. Getting a model to generate a technically flawless image means nothing, because it has no intention behind it, unlike, say, the crappy drawings of stick people all 4-year-olds make as they learn to draw and express emotion. The drawing of me with beard stubble all over my face, including my forehead, is framed on my desk, something no AI image would ever be.

Usually at this point, AI apologists will make arguments about “democratizing the creative expression”. Kinda like this:

While it’s true that you normally see only the best results (not the 50 crap ones, unless they are so wrong the originator shared them for hilarity), that isn’t the point. Creating something of value requires intent and effort.

Claiming that AI generation is the intent without the effort is like setting your alarm clock to go to the gym, but using a forklift to do the exercises. Or, to put it in terms familiar to the tech world, it’s like thinking a start-up idea is all it takes and expecting an exit without putting in the effort of building the company.

As the saying goes, Genius is 1 percent inspiration and 99 percent perspiration. See, it’s in the effort of creating that you often realise the intricacies and complexities of the idea. It’s why the value of preparing strategy documents lies more in writing them than reading them. The ‘perfect PRD’ is the one that gets discussed and explored with the team, not a templated wall of verbiage.

Inspiration lies in working through all the considerations and building up the skill, and in then communicating thought-through ideas to others.

Getting it massively wrong

With all the rush to add AI to everything, many are getting it wrong. Outside of the wrong implementation (like the Air Canada case I mentioned before), I’m talking about a massive disconnect between a company and its target audience.

Two examples from the creator space that popped up in the past week.

Draft2Digital, a service that allows authors to publish on multiple platforms, surveyed its authors on whether they’d be interested in having an LLM trained on their work. Even though it was a survey about potential interest and offered pay, it still caused a massive backlash. (EDIT: They have published the results, clearly indicating they will not be pursuing AI licensing any further. Good to see that they are listening to market feedback.)

Around the same time, NaNoWriMo (National Novel Writing Month, a non-profit that has been encouraging people to just write and create for over two decades) published its stance in support of AI. Their justification was that categorical denial of AI is classist and ableist, as not everyone has the means or the brains (their words!) to write. Not only have many, many authors railed against it and deleted their accounts, but two board members quit, and many neurodivergent authors have protested against the infantilising, patronising tone, which does more harm than good.

Those two organisations show that they have disengaged from their customer base to such a degree that they no longer understand them. This is important, and we’ll come back to it later.

So what is AI good for?

As a first step, understand that there is a lot more to “AI” than just “Generative AI”.

It is a field with decades of research, and many applications. There are potential uses you might find applicable for anything from basic NLP and vector searches, to building your own models on private, ethical datasets to solve hard problems. LLMs and diffusion models also have intrinsic value for research, even if it is to teach us what intelligence is not.

But these cases are not what we’re discussing here. Returning specifically to generative AI, there are use-cases where that unimaginative, “completely average”, mind-numbingly repetitive output is what’s actually required. Legal briefs, for example… just kidding! But there are situations where you’d want simpler, clearer language. Presenting options to a human to get them started may help them get the content ready for the target audience quicker.

A similar case can be made for diffusion models and generated multimedia (though note my background leans more towards NLP). Getting a hero-banner image was never the problem for marketers. You either pick some stock image, or you need something very specific and hire a creative team to realise the vision.

HINT: It’s not marketing speed-creating content. If you think massively generating bland content is the key to success, you probably think “SEO” is a business model. And if you believe that, I have a $2,999 online course to sell you for just $199 — today only!

Sam Altman is finding out the hard way that he can’t make money building products for the plagiarism cesspit of content-farm clickbait. This is the stuff that already takes up over 50% of the internet but is such low quality that Google puts it on the third page of search results.

No, sorry to disappoint. Good content and products come first. In the same way that SEO is just a tool in helping users reach and consume this content, AI does not produce the end product itself.

Think where you might use Grammarly or ProWritingAid. Coincidentally, for exactly the same reasons I hate them as an author: they strip the soul of the text, and get it to a bland common denominator. Bad for literature, good for simplifying government policy documents.

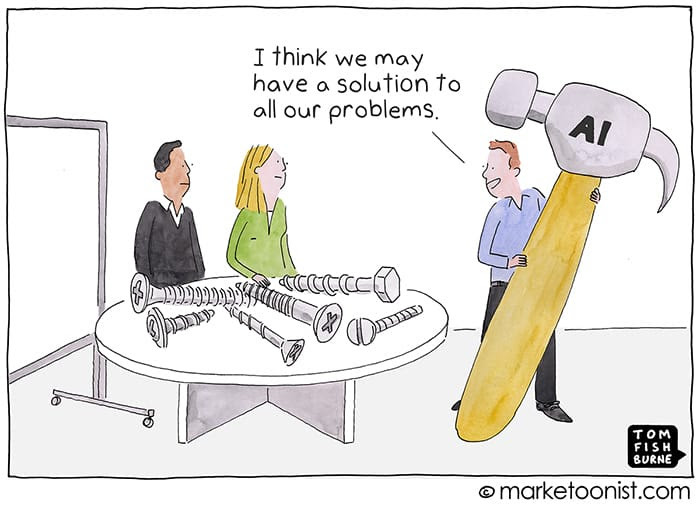

This is part of the problem: intelligence isn’t the ability to string words together into coherent sentences. The LLM hype is born from taking a specific tool or technique and trying to apply it to general problems. On the surface the massive data sets make it look plausible, but when you dig into it you find that it doesn’t, and cannot, solve those problems. You’d be better served in understanding the power and limitations of the tool, and using it for what it can do.

How to build responsible AI products

The first step is to identify the use-cases where Gen AI is the right tool, where ‘good enough’ can provide benefits without harm. You can start by identifying your core value prop and checking how AI might enhance it, by answering some questions like:

Who are your customers?

What are their needs?

How do you serve those needs?

Which type of AI can help you better serve that need?

How might they react to your addition of AI?

What are other considerations on the top of their mind?

Don’t assume that if you’re excited, they will be too.

How can you do so in a way that is ethical and supportive of society and the environment?

If that sounds familiar, it’s because it’s product management 101. Don’t start with a technology — especially one fraught with so many issues — and force it on a problem that it may not fit.

Rather, think about the core mission and vision of your product and company, and about which of these various tools can ethically support you in reaching that mission. What is the main ‘job to be done’ or pain point, or however you like to think about it, that your users need solved and that AI can provide a better solution for?

Next, pick models and services that are built on ethically sourced data, and keep abreast of developments. The case with multimedia generation is a bit more obvious when it comes to copyright violations, but it’s the same with LLMs. We are seeing better models emerge, and those are often built not just from “anything and everything found on the net”, but instead from highly curated data sets. Of course, “highly curated” doesn’t automatically mean ethical, so we need to push for transparency of data sets, opt-in policies, and compensation of creators from the vendors.

Furthermore, a lot of those specialised tools, especially around multimedia, are geared toward existing professionals. For example, think about creating an explainer video. To get the most out of video generation, even with AI-powered apps, you need to understand how storyboarding works and invest time in creating characters, scenes, and sequences to make something that looks professional. These aren’t “prompt and done.” In a lot of ways, this is similar to how Photoshop and Illustrator didn’t kill photography and graphic design, and getting a license to them doesn’t suddenly make you a graphic designer. You need the foundational education (which involved doing things by hand) in order to utilise those tools.

As the legal dust settles, I think we’ll see more of these specialist tools built upon openness and willingness to compensate those who contribute to those training sets. Smaller, specific, ethically sourced models would outperform general models, both in computing costs and in market adoption.

Side note: I explored the future of ethical sourcing of training data in this short story. I do enjoy contemplating and bridging the gaps between technology and creativity, in ways that benefit everyone, and I do so coming from both directions.

Summary

Only if you find cases where humans are tearing their hair out struggling with obtuse language, where there are repetitive tasks based on an existing body of knowledge, where tailoring and simplifying language for accessibility is required, should you reach for generative AI as a possible tool.

In many cases, you are likely to find that the initial AI output, even when “average” is the aim, is still not quite right, and the best use is to assist a human, not replace them. That is particularly evident in any type of creative effort, but even in consumer-facing technology the aim is to meet the person where they are and aid them.

From this, we can project two main use-cases:

Tools for professionals, where you develop new AI by understanding their problems, curating content ethically, and solving real problems for creatives.

Tools for consumers, where you package 3rd party AI models because you need good enough accuracy and simple generation to help the consumer accomplish tasks in better ways.

In both cases, the key point is that AI can be a wonderful assistive technology. We’ll see the rise of virtual assistants that help with repetitive tedium or specific challenges. But no one wants Hannibal Lecter as their personal assistant, someone who freely cannibalises them. So whenever you do use AI, please always pick a model that was ethically sourced, that doesn’t cause harm to society and the environment.

Thank you for reading to the end! This has ended up way longer than I anticipated (my rants are not an AI-generated word count 😜), but I hope you found value in there. I’d love to know your thoughts about where you see the future going.